Server, Docker or Serverless: A Small Business Guide to App Infrastructure

Having helped dozens of small and medium businesses choose the right infrastructure for their applications, I’ve learned that the “best” technology isn’t always the most advanced one; it’s the one that fits your team, budget, and business needs.

Whether you’re launching your first web app or scaling an existing business tool, understanding these three infrastructure approaches can save you thousands of dollars and many hours of frustration.

The Real Question: What Do You Actually Need?

Before diving into technical details, let’s start with the business questions that actually matter:

- Budget: How much can you spend monthly on hosting and management?

- Team: Do you have technical staff or are you outsourcing development?

- Scale: Are you serving 100 users or 100,000?

- Growth: Will your traffic be steady or highly variable?

- Timeline: Do you need to launch quickly, or can you invest in a more complex setup?

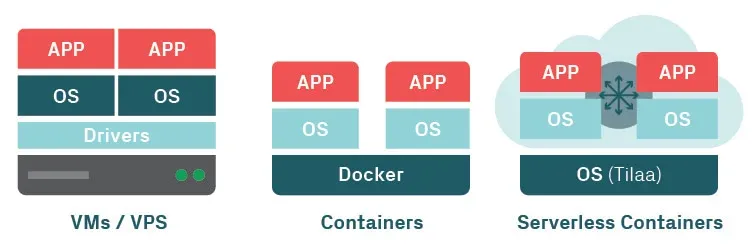

Traditional Servers: The Reliable Workhorse

What it is: Your app runs on a virtual machine that you rent and manage yourself (such as AWS EC2 instances, DigitalOcean Droplets, or Linode instances).

Perfect for:

- Steady traffic with predictable usage patterns

- Budget-conscious businesses ($20-100/month range)

- Simple applications like business websites, internal tools, or APIs

- Teams with some technical knowledge or reliable developers

Real-world costs:

- Small business app: $20-50/month

- Growing SaaS: $100-500/month

- High-traffic application: $500-2000/month

The trade-off: Monthly costs are lower, but you need someone who can manage Linux servers, apply updates, and configure scaling.
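A quick way to see this trade-off is to put a price on the time someone spends looking after the server. The minimal Python sketch below does that; the $40 hosting bill, the 4 hours of monthly maintenance, and the $75 hourly rate are hypothetical inputs for illustration, not quotes from any provider.

```python
def monthly_total_cost(hosting: float, admin_hours: float, hourly_rate: float) -> float:
    """Return the hosting bill plus the cost of the time spent managing it."""
    return hosting + admin_hours * hourly_rate


# Hypothetical figures: a $40/month virtual server that needs about 4 hours
# of patching and monitoring per month, at an assumed $75/hour.
hosting = 40.0
total = monthly_total_cost(hosting=hosting, admin_hours=4.0, hourly_rate=75.0)
print(f"Total: ${total:.2f}/month; hosting is {hosting / total:.0%} of it")
# Total: $340.00/month; hosting is 12% of it
```

With these assumed numbers, the hosting bill is a small share of the real monthly cost. If your team already has Linux skills and spends fewer hours on maintenance, the server’s low price carries much more weight.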

Docker: The Containerization Revolution

Docker has revolutionized how we package and deploy applications. It provides:

- Consistent environments across development and production

- Easy scaling through container orchestration

- Quick recovery from failures, since a crashed container can be restarted automatically

- Isolation between services

The Docker ecosystem typically involves:

- Container images for your applications

- Orchestration tools (like Kubernetes)

- Separate containers for different services (databases, APIs, UIs)
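Consistent environments usually depend on one habit: build the code into the image once, then pass in anything that differs between development and production as environment variables. The minimal Python sketch below follows that approach. The variable names `DATABASE_URL`, `DEBUG` and `PORT` and the database service name `db` are hypothetical. Containers on the same Docker Compose network can reach each other by service name.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    debug: bool
    port: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from environment variables so one image runs anywhere."""
    return Settings(
        # Hypothetical default: a database container named "db" on the same
        # Compose network; production overrides this with its own URL.
        database_url=env.get("DATABASE_URL", "postgresql://db:5432/app"),
        debug=env.get("DEBUG", "false").lower() in ("1", "true", "yes"),
        port=int(env.get("PORT", "8000")),
    )


if __name__ == "__main__":
    print(load_settings())
```

In production you would run the same image with different values, for example `docker run -e DATABASE_URL=... your-image`. Nothing inside the image changes between environments.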

Serverless: The Future of Computing?

Serverless computing comes in two main flavors:

- Lambda-style Functions

  - On-demand execution

  - Pay-per-use pricing

  - Support for various programming languages

  - Cold starts: a function that has been idle may respond more slowly to its first request while a new instance starts up

- Edge Functions (like Cloudflare Workers)

  - Ultra-low latency, because code runs in locations close to your users

  - JavaScript/TypeScript focused

  - Distributed execution

  - Limited execution time and size
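With Lambda-style functions, you deploy a single handler. The platform calls it once per event, and pay-per-use billing charges you for the time it runs. The minimal Python sketch below uses the `handler(event, context)` form that AWS Lambda uses for Python functions. The greeting logic, the `lang` query parameter, and the API Gateway-style event and response shapes are assumptions for illustration. Notice where the setup code sits: that placement is how you reduce the impact of cold starts.

```python
import json

# Module-level code runs once when a new instance starts (the cold start).
# Warm invocations of the same instance reuse it, so expensive setup such as
# SDK clients, database connections or loaded config belongs here.
GREETINGS = {"en": "Hello", "es": "Hola"}  # stand-in for expensive setup


def handler(event, context):
    """Entry point called by the platform once per event."""
    # Assumes an API Gateway-style event; the key may be missing or None.
    params = event.get("queryStringParameters") or {}
    message = GREETINGS.get(params.get("lang", "en"), GREETINGS["en"])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": message}),
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a fake event.
    print(handler({"queryStringParameters": {"lang": "es"}}, None))
```

Because the handler is a plain function, you can test it locally without deploying anything.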

Making the Right Choice

The choice between these approaches depends on several factors:

When to Choose Traditional Servers

- Complex applications requiring significant resources

- Applications that keep data in memory between requests or run long-lived processes

- Cost-sensitive projects with predictable workloads

- When you have Linux expertise on your team
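The case for servers with cost-sensitive, predictable workloads comes down to a break-even point. A fixed server costs the same every month, while a pay-per-use bill grows with every request. The minimal Python sketch below assumes a bill made of a per-request fee plus compute billed in GB-seconds (memory × run time). All prices and workload figures are illustrative assumptions, so check your provider’s current price list.

```python
def serverless_monthly_cost(requests: float, avg_duration_s: float, memory_gb: float,
                            price_per_million_requests: float,
                            price_per_gb_second: float) -> float:
    """Pay-per-use bill: a per-request fee plus compute billed in GB-seconds."""
    request_fee = requests / 1_000_000 * price_per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_fee + compute_fee


def break_even_requests(server_monthly: float, avg_duration_s: float, memory_gb: float,
                        price_per_million_requests: float,
                        price_per_gb_second: float) -> float:
    """Monthly request volume at which pay-per-use equals a fixed server bill."""
    cost_per_request = serverless_monthly_cost(1, avg_duration_s, memory_gb,
                                               price_per_million_requests,
                                               price_per_gb_second)
    return server_monthly / cost_per_request


# Assumed inputs for illustration only: a $40/month server, 200 ms average
# run time, 512 MB of memory, $0.20 per million requests and $0.0000166667
# per GB-second. Free tiers and extra services are ignored.
RATES = dict(price_per_million_requests=0.20, price_per_gb_second=0.0000166667)
n = break_even_requests(40.0, avg_duration_s=0.2, memory_gb=0.5, **RATES)
print(f"Break-even at roughly {n / 1e6:.1f} million requests per month")
```

With these assumed inputs, the break-even point is around 21 million requests a month. Below that, pay-per-use is cheaper. A steady load well above it favors the fixed server. The sketch ignores free tiers, tiered pricing, gateway and data-transfer charges, and whether one server can actually handle that load.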

When to Choose Docker

- Microservices architectures

- Complex systems made up of many interdependent components

- When you need consistent environments across development, testing, and production

- Applications requiring multiple services

When to Choose Serverless

- Simple, stateless functions

- Event-driven applications

- Background jobs

- Applications requiring global distribution

- When you want to minimize operational overhead

Best Practices and Considerations

- Start Simple

  - Begin with the simplest solution that meets your needs

  - Avoid over-engineering early in the project

- Consider Your Team

  - Factor in your team’s expertise

  - Consider the learning curve of each approach

- Cost Analysis

  - Compare long-term costs

  - Consider both development and operational costs

- Scalability Requirements

  - Evaluate your expected growth

  - Consider peak vs. average load
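Peak versus average load is easiest to see with numbers. Fixed servers must be sized for the busiest moment, so spiky traffic leaves most of that capacity idle. That idle capacity is the gap that autoscaling containers or pay-per-use functions can close. The minimal Python sketch below assumes a hypothetical per-server capacity; in practice you would measure it with a load test.

```python
import math


def servers_for_peak(peak_rps: float, rps_per_server: float) -> int:
    """Number of fixed servers needed to absorb the busiest moment."""
    return math.ceil(peak_rps / rps_per_server)


def average_utilization(avg_rps: float, servers: int, rps_per_server: float) -> float:
    """Share of provisioned capacity actually used under average load."""
    return avg_rps / (servers * rps_per_server)


if __name__ == "__main__":
    # Hypothetical workload: 5 requests/s on average, spikes to 120 requests/s,
    # and an assumed capacity of 50 requests/s per server.
    n = servers_for_peak(peak_rps=120, rps_per_server=50)
    util = average_utilization(avg_rps=5, servers=n, rps_per_server=50)
    print(f"{n} servers, {util:.0%} average utilization")  # 3 servers, 3% average utilization
```

If your average and peak are close, fixed capacity is used efficiently. The wider the gap, the more you pay for capacity that sits idle.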

Key Takeaways

- There’s no one-size-fits-all solution

- Each approach has its strengths and weaknesses

- Consider your specific use case and requirements

- Factor in your team’s expertise and experience

- Start simple and evolve as needed

Moving Forward

The infrastructure landscape continues to evolve, with new tools and approaches emerging regularly. The key is to stay informed and choose the right tool for your specific needs. Remember that you can migrate between approaches as your requirements change, although each migration takes time and planning.

Call to Action

Have you chosen between traditional servers, Docker, and serverless for your projects? Share your experiences and challenges in the comments below. If you’re still deciding, consider building a small proof of concept with each approach to see how it fits your specific use case.